The Golden Rule for Django developers is “Don’t prematurely optimize code.” But once a website goes live, user demand can outgrow what the site was built to handle, which means slower page loads and a degraded user experience. At that point, code optimization is no longer a waste of time; it’s required.

There are several ways to optimize, but scaling out horizontally to deal with growing demand gets expensive. Luckily, there’s still a way to salvage your code without breaking the bank: You can cache.

But what is caching?

Caching is a fancy word for being lazy or, more accurately, letting your servers be lazy. The concept is that we can reuse what we’ve done before so that we don’t have to needlessly redo it. A cache, therefore, is an ephemeral memory bank that provides quick access to previously computed data where you need it, when you need it.
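To make the idea concrete, here is a minimal plain-Python sketch using the standard library's `functools.lru_cache`, which keeps previously computed results in process memory. It is in-process memoization rather than Django's cache framework. The function `slow_square` and its call counter are hypothetical stand-ins for any expensive computation.

```python
from functools import lru_cache

call_count = 0  # counts how many times the real work actually runs


@lru_cache(maxsize=128)
def slow_square(n: int) -> int:
    """Hypothetical expensive computation; the body runs only on a cache miss."""
    global call_count
    call_count += 1
    return n * n


first = slow_square(12)   # miss: computes the result and stores it
second = slow_square(12)  # hit: served from the cache, body not executed
print(first, second, call_count)  # prints "144 144 1"
```

The second call never reaches the function body. That is the whole trick: the server gets to be lazy because the work was already done once.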

To explain how caching fits into a computing system, we can look at a standard web infrastructure. Let’s use Amazon’s:

- There’s an Elastic Load Balancer (a service that distributes incoming traffic across many servers) in front of a cluster of EC2 servers (virtual machines that can be added as demand grows)

- The EC2 servers store some static content in S3 buckets (static file storage)

- The EC2 servers then talk to an RDS backend (a managed, durable database) to get things done

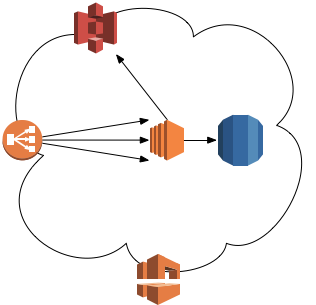

Now, let’s look at that same system with the addition of caching.

- A downstream cache is placed in front of both the Elastic Load Balancer and the S3 bucket

- The EC2 servers then talk to both the RDS database and an upstream cache, Amazon ElastiCache, a managed in-memory cache service that runs Memcached or Redis

We added the downstream cache to deliver pages faster with fewer server resources by re-serving a page generated for one request to future requests for the same page. The upstream cache, on the other hand, delivers pages faster with fewer server resources by reusing calculated values and content fragments generated during one page view in future page views. Both can transform the speed of your system. The list below summarizes the two options in terms of placement and purpose:

- Upstream caches are services your servers talk to that live further upstream, away from the user
  - These caches can hold computed data, content fragments, and syndicated content
  - Examples: Memcached and Redis

- Downstream caches are services that users talk to that live further downstream from your servers, toward the user
  - These caches work at the page level of a website
  - Examples: CloudFront and other CDNs, or a Squid or Varnish proxy server

The purpose of an upstream cache, then, is to save the results of expensive calculations so that they don’t have to be redone just to produce the same result. To be clear, this isn’t denormalization; the cache is an entirely temporary store of data.

Upstream caching depends on deciding how long each value should live. Depending on the purpose of your cache, you can give a cache key a fixed lifetime, or your code can invalidate the key when it’s no longer useful. A fixed lifetime requires far less code complexity, and it works well wherever slightly stale data and content (say, up to an hour old) are acceptable under your application’s business requirements.
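A fixed lifetime is simple to implement: store an expiry time next to each value and treat anything past it as a miss. Django's low-level API takes this lifetime as a timeout in seconds, as in `cache.set(key, value, timeout)`. The `TTLCache` class below is a hypothetical plain-Python sketch of the same idea. It uses an injectable clock so that the one-hour expiry can be demonstrated without waiting.

```python
import time


class TTLCache:
    """Hypothetical in-memory cache where every key expires after a fixed lifetime."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock  # injectable so expiry can be shown without sleeping
        self._data = {}      # key -> (expires_at, value)

    def set(self, key, value, timeout):
        self._data[key] = (self._clock() + timeout, value)

    def get(self, key, default=None):
        entry = self._data.get(key)
        if entry is None:
            return default
        expires_at, value = entry
        if self._clock() >= expires_at:  # stale: drop it and report a miss
            del self._data[key]
            return default
        return value


now = [0.0]  # simulated clock, in seconds
cache = TTLCache(clock=lambda: now[0])
cache.set("front_page_stats", {"posts": 42}, timeout=3600)  # one hour

now[0] = 1800.0
print(cache.get("front_page_stats"))  # prints {'posts': 42}
now[0] = 3600.0
print(cache.get("front_page_stats"))  # prints None: the hour is up
```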

With invalidation, it doesn’t matter how much time has passed: as soon as newer, more relevant values come along, the stale entry is discarded and replaced. You can keep a message board always fresh with invalidation, for example, but be aware that invalidating cache keys is a complex and highly application-specific solution.
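For the message board example, invalidation means that the code path that writes a new post also deletes the cached thread list. The next read then rebuilds the list from the source of truth. The `MessageBoard` class below is a hypothetical sketch that uses plain dicts in place of a database and a cache. In Django, the equivalent step would typically be a `cache.delete(key)` call made after saving, possibly from a `post_save` signal handler.

```python
class MessageBoard:
    """Hypothetical board that caches its rendered thread list and
    invalidates that entry whenever a new post arrives."""

    CACHE_KEY = "board:threads"

    def __init__(self):
        self.posts = []   # stand-in for the database table
        self.cache = {}   # stand-in for an upstream cache
        self.renders = 0

    def thread_list(self):
        html = self.cache.get(self.CACHE_KEY)
        if html is None:  # miss: rebuild from the source of truth
            self.renders += 1
            html = "\n".join(f"<li>{p}</li>" for p in self.posts)
            self.cache[self.CACHE_KEY] = html
        return html

    def add_post(self, text):
        self.posts.append(text)
        self.cache.pop(self.CACHE_KEY, None)  # invalidate: next read rebuilds


board = MessageBoard()
board.add_post("Hello")
board.thread_list()
board.thread_list()  # hit: no re-render
board.add_post("Is caching worth it?")
print(board.thread_list())  # fresh list that includes the new post
print(board.renders)        # prints 2
```

The hard part in a real application is finding every write path that should trigger the delete. That is why invalidation tends to be application-specific.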

There are good places in your code to use upstream cache and then there are times that you should definitely reconsider it. Before you cache, consider the following:

Good Times to use Upstream Cache:

- Properties are good for caching. In Python, these are methods decorated with the @property decorator. Since properties take no arguments yet perform calculations, caching property values per object often saves you from needlessly repetitive calculations.

- Compiled things, like templates, are good for caching because they can be pickled and serialized to avoid redundant computation steps.

- Generated things, like video clips and thumbnails, are good candidates because, unless the original source has changed, there’s no need to generate them more than once.

- Aggregated things, like comment counts on a blog post, are also good things to cache because you’ll avoid unnecessary iteration and counting.
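Per-object property caching and aggregate caching can be combined. The standard library's `functools.cached_property` (Python 3.8+) computes a property on first access and then stores the result on the instance. Django ships a similar `django.utils.functional.cached_property`. The `BlogPost` class below is hypothetical, and its list of comments stands in for an expensive COUNT query.

```python
from functools import cached_property


class BlogPost:
    """Hypothetical blog post whose comment count is computed at most once per object."""

    def __init__(self, comments):
        self._comments = comments
        self.count_calls = 0

    @cached_property
    def comment_count(self):
        # Stands in for an expensive aggregate such as a COUNT query.
        self.count_calls += 1
        return len(self._comments)


post = BlogPost(["Great read", "Thanks!", "More on Redis?"])
print(post.comment_count, post.comment_count)  # prints "3 3"
print(post.count_calls)                        # prints 1
```

This cache lives only as long as the object, so it saves repeated work within a single request. To share a count across requests, you would store it in an upstream cache instead. Deleting the attribute (`del post.comment_count`) clears the cached value, and the next access recomputes it.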

Bad Times to use Upstream Cache:

- Rarely accessed or highly volatile things are bad caching candidates because you’ll waste space or you’ll cache something that’s probably going to change before you need it again.

- Computed values that need to be durably stored are bad to cache, too, because cache is temporary by nature and things that you need permanently will be safer living in your database.

Think Twice about Upstream Caching for These Scenarios:

- ORM (object-relational mapper) objects can be cached, but it’s not necessarily wise: saving a cached instance of an object may overwrite changes made to the database since that instance was cached.

- User-specific things can be cached, but doing so may lead to privacy violations if one user’s cached data is served to another, not to mention they might fall under “rarely accessed things.”

- Admin-only things should be carefully evaluated before caching, too, because a cached copy could leak sensitive information to the public. Good examples of this are CMSs and draft copies of your work.
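If you do cache user-specific data, one common safeguard is to build the user's identity into every cache key. With a single shared key like `"dashboard"`, whichever user filled the cache first would have their page served to everyone. The helper `user_cache_key` and the `dashboard_for` view below are hypothetical, and a plain dict stands in for the upstream cache. For downstream page caches, Django's `vary_on_cookie` decorator (which sets the `Vary` header) plays a comparable role.

```python
def user_cache_key(user_id: int, name: str) -> str:
    """Hypothetical helper: namespace user-specific entries by user ID so one
    user's cached data is never looked up under another user's key."""
    return f"user:{user_id}:{name}"


cache = {}  # stand-in for an upstream cache


def dashboard_for(user_id: int) -> str:
    key = user_cache_key(user_id, "dashboard")
    if key not in cache:
        cache[key] = f"Private dashboard for user {user_id}"  # placeholder render
    return cache[key]


print(dashboard_for(1))  # prints "Private dashboard for user 1"
print(dashboard_for(2))  # prints "Private dashboard for user 2", not user 1's page
```

Scoped keys prevent cross-user leaks. They do not make admin-only or draft content safe to put behind a public downstream cache.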

When in doubt, think twice. Caching is incredibly useful but it’s not a fit for every circumstance. Plan ahead for the scenarios that could disrupt your code optimization efforts, and perform some Django testing before committing to a caching solution.